How to prepare for the CKAD exam (as of Sep 2021)

1. Mantra to pass CKAD exam

Practice !!!

Practice !!!

Practice !!!

2. Purchase the exam voucher

Exam vouchers are generally valid for 1 year, but always verify this at the link below, as the terms may change from time to time. Once you have registered, you can also reschedule the exam, provided the scheduled slot is not within 24 hours. At the time of writing, the regular price of a CKAD exam voucher is $375 USD; discounts of 10% to 50% are offered now and then.

https://www.cncf.io/certification/ckad/

Once you register for the exam, go through the candidate handbook at least once. It answers many exam-related questions, such as which browser is supported (i.e. Chrome), which extension to use, and the rules and regulations.

https://docs.linuxfoundation.org/tc-docs/certification/lf-candidate-handbook

3.0 Curriculum

The official exam syllabus is provided here. Familiarize yourself with the syllabus and prepare section by section. https://github.com/cncf/curriculum

I set myself a goal of one week to finish each section. Goals like this help you prepare for the exam in a planned way.

3.1 Changes from Sep 28th 2021

Previous version for comparison:

Current Curriculum

New items included in the changed curriculum are highlighted below. The exam has 19 questions or scenarios to complete in 2 hours, which is a little over 6 minutes per question on average, and some questions are long enough to take a full minute just to read. Time is the main challenge in this exam; it can be conquered only by practicing repeatedly until your fingers have the muscle memory.

4.0 Make kubernetes.io your browser home page and download the CKAD exam browser favorites

https://kubernetes.io/docs/home/

The more familiar you are with this site, the higher your chances of passing the exam. Think of it this way: you are allowed to bring this online guide into the exam and officially copy from it to finish your tasks (thanks to the open-book format, which helps students understand the concepts rather than memorize them).

The CKAD exam favorites (bookmarks) are in the reference section; get used to those URLs. While practicing for the exam, try to reach the required section quickly and copy the required YAML quickly.

For example, if you need to create a PV (persistent volume), go to “https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistent-volumes”, copy the YAML from there into your exam terminal, and modify it.
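As a rough illustration of that copy-and-modify workflow, the sketch below writes a small PersistentVolume manifest to a file. It is not copied verbatim from the documentation page; the name `practice-pv`, the 1Gi size, the `manual` storage class and the `/mnt/data` host path are placeholder assumptions that you would replace with whatever the exam question asks for.

```shell
# Write a minimal PersistentVolume manifest to pv.yaml.
# All names and sizes below are placeholders, not values from the exam.
cat > pv.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolume
metadata:
  name: practice-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  storageClassName: manual
  hostPath:
    path: /mnt/data
EOF

# On a machine with a cluster, you would then create and inspect it:
#   kubectl apply -f pv.yaml
#   kubectl get pv practice-pv
echo "wrote pv.yaml"
```

Editing a file and applying it keeps the manifest around, which is handy when a later question asks you to change the same object.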

5.0 You need a Kubernetes environment to practice

5.1 Minikube

The best way to get a practice environment is to set up minikube. Refer to my blog below for instructions; if you use Linux, I have provided a script to set up minikube.

Note: to practice the network-related topics, you need additional minikube modules.

The following script starts minikube with 2 nodes (the default is 1 node), 4 cores and 16 GB of memory. I use this higher configuration because my laptop has 8 cores and 32 GB of memory, but 1 node with 2 cores (the minimum minikube accepts for Kubernetes) and 4 or 8 GB also works well.

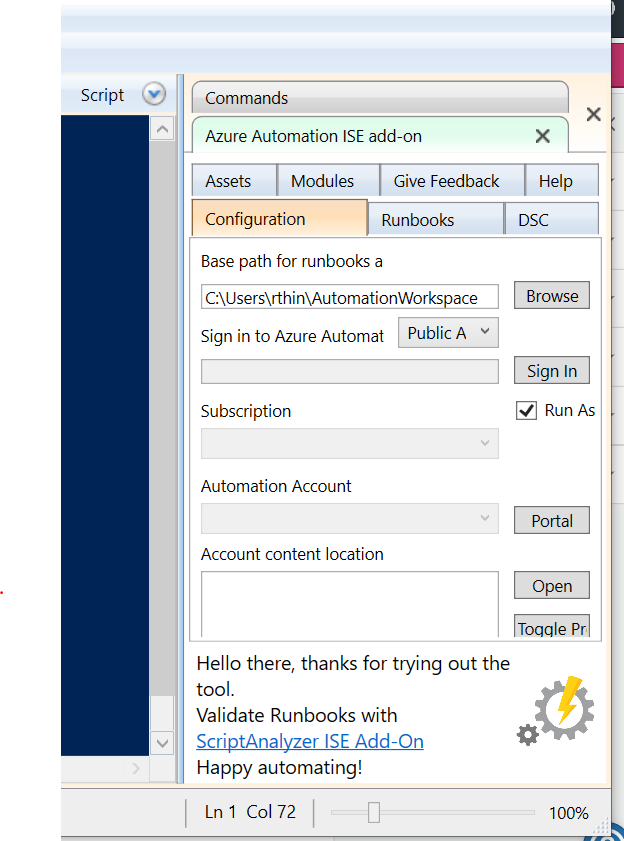
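For readers who cannot see the screenshot, a start command with the same sizing might look like the sketch below. This is an assumption about what such a script contains, not a transcription of it. `--nodes`, `--cpus` and `--memory` are standard `minikube start` flags; the variable names are only for illustration. As far as I know the CPU and memory values apply to each node, so size them to what your machine can spare.

```shell
# Sizing taken from the text above: 2 nodes, 4 CPUs, 16 GB of memory.
NODES=2
CPUS=4
MEMORY=16g
MINIKUBE_ARGS="--nodes=${NODES} --cpus=${CPUS} --memory=${MEMORY}"

# Start the cluster only when minikube is installed; otherwise just show the command.
if command -v minikube >/dev/null 2>&1; then
  # Left unquoted on purpose so the shell splits the string into separate flags.
  minikube start ${MINIKUBE_ARGS}
else
  echo "minikube not found; would run: minikube start ${MINIKUBE_ARGS}"
fi
```

For a smaller laptop, set `NODES=1` and lower `CPUS` and `MEMORY` before running it.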

5.2 Practice environment provided by the Linux Foundation

If you purchase an exam voucher and register at the Linux Foundation, you get access to the killer.sh exam simulator. Trust me, it is much tougher than the real exam, so if you finish its scenarios in time, you can relax and attend the exam with full confidence.

It can be accessed at “https://killer.sh/dashboard” (CKAD simulator) using your Linux Foundation credentials. Did including the simulator contribute to the rise in the exam price? As I remember, exam vouchers used to cost around USD 300.

5.3 KodeKloud labs

Enrolling in KodeKloud is a good idea, as it has become the de facto standard in Kubernetes training (this is not a marketing gimmick, and I have no association with them). KodeKloud has become a household name for those starting to learn Kubernetes. It gives you a virtual environment to practice in; all you need is an internet connection and a browser. Before attending the exam, you should finish both lightning labs. Consider this a pre-exam qualification: if you are unable to do it, better to postpone your exam.

5.4 CKAD-Practice-Questions

You need questions to practice with, and you can get them here.

https://github.com/bbachi/CKAD-Practice-Questions

5.5 Katacoda virtual browser based environments

Katacoda offers some basic scenarios for free. It is a good place to start practicing, as they give you the solutions too. https://www.katacoda.com/courses/kubernetes

6.0 Exam tips

Some common tips for the exam:

alias k=kubectl

(I observed that it is already configured in the exam terminal; check with k <enter> as soon as you start the exam.)

Export the following two variables; they come in very handy from time to time.

export do="--dry-run=client -o yaml"

export d="--grace-period=0 --force"

When you delete a pod, the default wait time (grace period) is 30 seconds. These 30 seconds are very precious. So practice using a variable like $d when deleting a pod: appending $d deletes it immediately and saves you those 30 seconds.

k delete po testpod $d
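Putting the two variables together, a practice round might look like the sketch below. The pod name `testpod` comes from the example above; the `nginx` image and the file name `testpod.yaml` are assumptions for illustration. The quotes must be plain ASCII quotes and `--force` needs two plain hyphens, otherwise the shell or kubectl rejects the value. The sketch calls `kubectl` directly because aliases are not always expanded inside scripts.

```shell
# Shortcuts from the tips above.
export do="--dry-run=client -o yaml"
export d="--grace-period=0 --force"

if command -v kubectl >/dev/null 2>&1; then
  # Generate a pod manifest without creating anything on the cluster.
  # $do is left unquoted so it expands into two separate flags.
  kubectl run testpod --image=nginx $do > testpod.yaml

  # Create the pod, then delete it immediately without the 30-second wait.
  kubectl apply -f testpod.yaml
  kubectl delete pod testpod $d
else
  echo "kubectl not found; skipping the cluster commands"
fi
```

Generating the YAML with `$do` and editing the file is usually faster than typing a manifest from scratch.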

7.0 Reference

7.1 Bookmarks

kubernetes

- container port
- configmap all env
- configmap env variable
- cronjob
- env variable simple
- job
- liveness command
- liveness http
- readiness command
- resource cpu
- rollout dep
- rolling update yaml
- resource memory
- network policy
- node selector
- node affinity
- pvc
- pv
- serviceaccount
- secret env variable
- secret as all env var
- securitycontext user
- securitycontext capabilities
- service Nodeport
- service clusterip
- taint
- toleration
- volume emptydir
- volume configmap
- volume secret
- volume hostpath
- volume with pvc
- Assigning Pods to Nodes | Kubernetes
- Connecting Applications with Services | Kubernetes
- Kubectl Reference Docs
- Perform a Rolling Update on a DaemonSet – Kubernetes
- kubectl Cheat Sheet | Kubernetes
- Assign CPU Resources to Containers and Pods
- Configure Liveness, Readiness and Startup Probes | Kubernetes
- ingress 1.18